April 10, 2020 By BlueAlly

Microservices are quickly changing the face of cloud computing, giving cloud architects the tools needed to move away from provisioning resources statically, such as with servers and virtual machines (VMs). New types of workloads, like serverless and containers, realize greater operational efficiencies, and compute as a service (CaaS) is now more affordable and scalable than ever before.

From a cloud security standpoint, however, microservice architectures require drastically new thinking. For example, DevSecOps practices assume that infrastructure is being deployed to the same resilient and secure endpoints, but with serverless, everything is in flux: an IP address associated with an S3 bucket today could be reassigned to an orchestrator or a load balancer within minutes of the S3 bucket releasing it. The runtime image, or the workload running that image, becomes the security perimeter, and the cloud security approach needs to reflect this in order to secure the system as a whole.

To achieve this, it’s vital to understand all of the moving parts. In this article, we will discuss how providing workload security at various layers can ensure that microservice architectures remain as secure as possible—even before the first Kubernetes execution.

Serverless and Container Components

Before discussing the challenges, we need to better understand the components that make up serverless and container workloads, along with their security needs. From a cloud security standpoint, traditional security methodologies cannot be applied to either serverless or container-based architectures, as each poses inherent differences. With these new workload architectures, there are no longer static servers with a fixed IP address running the infrastructure and applications as an indivisible unit.

Instead, serverless is an execution-based architecture that runs application code on demand—automatically building out infrastructure whenever the function (e.g., an AWS Lambda) is invoked.

Needless to say, serverless doesn’t mean that no servers are involved.

Rather, it means that the servers providing the compute the user requires are provisioned and updated dynamically. While this may save system admins a great headache, since they no longer have to think about infrastructure, it creates a nightmare for cloud security practitioners, who quickly lose visibility and control.

In comparison, container-based architectures aim to increase the efficiency of current server models, particularly virtual machines, by abstracting the application layer from the host environment in which it runs. They also separate the application into its constituent parts so that it can be moved among infrastructure much more efficiently. These components are:

- Container images: These contain the basis for a typical cloud application, such as a web server. They contain all the necessary dependencies and binaries to provide services. Like a virtual machine (VM) image, they are stored as a file.

- Docker images: These contain the container plus the runtime necessary to allow it to compute and function.

- Kubernetes: This is the automation orchestrator that handles the work of automatically provisioning new server infrastructure in order to provide adequate computing resources to support the required task. Kubernetes can handle deployment onto any kind of server, whether an EC2 instance or bare metal housed on-premises. The atomic Kubernetes unit is known as a “pod,” and the server on which it runs is known as a “node.” Each pod is tied to a node upon scheduling, and if that node fails, the pod is simply rescheduled onto a different node in the cluster. Kubernetes is elastic, allowing for automatic scaling of resources as use cases require. But unlike other elastic services, such as Amazon ECS, Kubernetes can provision server resources in any cloud environment or on on-premises servers. As with Lambda functions, Kubernetes is the orchestration engine that allows the process to run by itself.

Cloud Network Security Challenges for Microservices

Let’s now dive into more of the challenges. Cloud security leaders need to think creatively when working to secure serverless and container deployments, as the entire design of these applications is fundamentally different.

- For instance, the network perimeter in the cloud is now the workload itself. DevSecOps practices that rely on a traditional fixed perimeter, based on server IP addresses, are not suitable for securing these deployments.

- For added complexity, organizations that use function-as-a-service (FaaS) providers, such as AWS Lambda, now need to control hundreds of event inputs and potential blind spots downstream of an initial function call. This makes controlling permissions and access groups extremely important, particularly as the infrastructure scales. Organizations need to understand the role and requirements of each function or container component and design permissions around those requirements. Only then can they ensure that proper controls and configurations are in place.

- Visibility is also a challenge. With hundreds, if not thousands, of assets, it is difficult for cloud security leaders to keep track of what is happening across their workloads, which makes manually remediating issues difficult, if not impossible.

With these challenges in mind, we now must look at ways to address them, so organizations can achieve the benefits of operational efficiency and speed to market without impacting security or compliance.

Layered Security Is the Answer

Check Point’s philosophy for securing microservice architectures and cloud workloads centers around securing the stack at five levels. These are:

- Posture hardening/vulnerability management: This takes a proactive approach to ensure that unpatched vulnerabilities and unsafe permission structures never even make it to a staging environment.

- Cloud network security (microsegmentation): This creates network security within the Kubernetes cluster itself.

- Workload runtime protection: This assesses the workloads themselves to create baseline behavioral states at the application layer to determine unexpected behavior.

- Endpoint/cloud detection & response (EDR or CDR): An intelligence layer identifies suspicious traffic being served.

- Malware scanning: Finally, the codebase is continuously scanned for malware.

Posture Hardening

Check Point supports cloud architects looking to take preventative measures that keep unsafe server configurations out of production, even in CI/CD workflows. To achieve this, Check Point CloudGuard security gateway uses the CloudGuard Dome9 Governance Specification Language (GSL) syntax to inspect and run tests on compliance settings.

Check Point Dome9 has developed a rich library of cloud governance and compliance rules (e.g., PCI, HIPAA, NIST, and CIS) for major cloud providers, including AWS, Azure, and Google Cloud. CloudGuard Dome9 allows users to build test queries and then checks them against regulatory and compliance best practice models.

For instance, when scanning a user’s suggested AWS Lambda functions, CloudGuard checks that each function has been assigned tags, keys, and values. If it has not, CloudGuard flags the function as noncompliant and provides detailed output showing the user exactly why the function was determined unsafe.

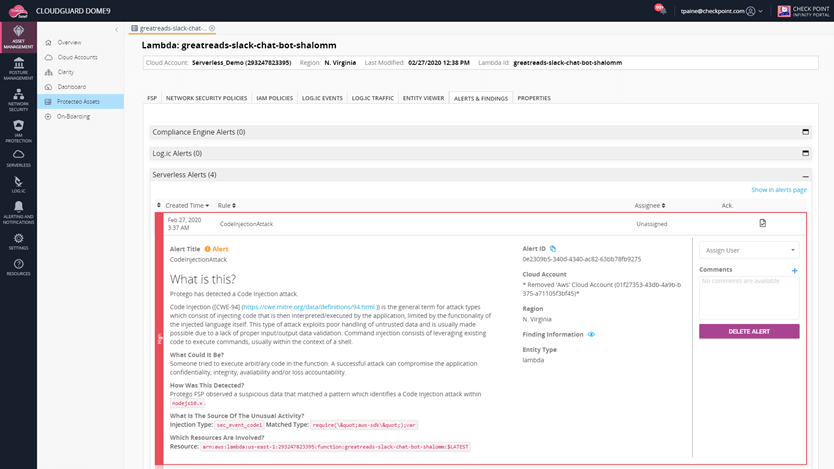

Figure 1: CloudGuard flagging noncompliant functions
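To make the tag check concrete, here is a minimal sketch of the idea in plain Python. It is not GSL and does not reflect how CloudGuard implements its rules; the `FunctionConfig` type, the `check_tags` helper, the function names, and the required tag keys are all hypothetical and chosen only for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class FunctionConfig:
    """Hypothetical, simplified view of a serverless function's metadata."""
    name: str
    tags: dict[str, str] = field(default_factory=dict)


def check_tags(fn: FunctionConfig, required_keys: set[str]) -> list[str]:
    """Return human-readable findings; an empty list means the function passes."""
    findings = []
    if not fn.tags:
        findings.append(f"{fn.name}: no tags assigned")
    for key in sorted(required_keys):
        if key not in fn.tags:
            findings.append(f"{fn.name}: missing required tag '{key}'")
        elif not fn.tags[key].strip():
            findings.append(f"{fn.name}: tag '{key}' has an empty value")
    return findings


# Illustrative inventory; the names and tag keys are assumptions.
functions = [
    FunctionConfig("resize-image", {"owner": "media-team", "env": "prod"}),
    FunctionConfig("send-email", {"owner": ""}),
    FunctionConfig("legacy-job"),
]

for fn in functions:
    for finding in check_tags(fn, {"owner", "env"}):
        print(finding)
```

Returning a list of findings, rather than a single pass/fail flag, mirrors the point made above: the user should be able to see exactly why a function was judged noncompliant.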

Additionally, CloudGuard provides continuous vulnerability scanning to ensure that items such as access keys have not accidentally been left accessible within the source code.

A recent data breach in Israel resulted in the exposure of the entire national voting record as the result of an access key left in the source code. This case underscores why pre-deployment code-base scanning is vital.
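As a rough illustration of what pre-deployment scanning for leaked keys involves, the sketch below searches source text for strings shaped like AWS access key IDs. The regular expression is a commonly used heuristic (a four-letter prefix such as `AKIA` or `ASIA` followed by 16 uppercase letters or digits), not an authoritative definition, and the `find_access_keys` helper is hypothetical. It says nothing about how CloudGuard itself performs this scan.

```python
import re

# Heuristic only: matches strings shaped like AWS access key IDs.
# Real scanners combine many patterns with entropy checks and allowlists.
ACCESS_KEY_ID = re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b")


def find_access_keys(source: str) -> list[tuple[int, str]]:
    """Return (line_number, match) pairs for every suspected key ID."""
    hits = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for match in ACCESS_KEY_ID.finditer(line):
            hits.append((lineno, match.group()))
    return hits


# AKIAIOSFODNN7EXAMPLE is the placeholder key ID used in AWS documentation.
sample = 'region = "us-east-1"\naws_access_key_id = "AKIAIOSFODNN7EXAMPLE"\n'

for lineno, key in find_access_keys(sample):
    # Print only a masked form so the scanner does not re-leak the key.
    print(f"line {lineno}: possible access key ID {key[:4]}...{key[-4:]}")
```

A check like this is cheap enough to run on every commit, which is the point of catching credentials before they reach a deployed code base.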

CloudGuard can be configured to automatically generate appropriate permission sets for identity access management (IAM), across all cloud providers, based on the detected environment. CloudGuard can do this where one has not been set or an inappropriate one—such as “allow all access”—has been configured.
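The “allow all access” case can be detected mechanically. The following sketch, which assumes policies in the standard AWS IAM JSON shape (`Statement`, `Effect`, `Action`, `Resource`), flags statements that allow every action on every resource. The helper names and sample policies are hypothetical, and the sketch covers only detection, not the automatic generation of replacement permission sets described above.

```python
def _as_list(value):
    """IAM accepts a single string or object where a list is also valid."""
    return value if isinstance(value, list) else [value]


def overly_permissive_statements(policy: dict) -> list[dict]:
    """Return Allow statements that grant every action on every resource."""
    flagged = []
    for stmt in _as_list(policy.get("Statement", [])):
        if stmt.get("Effect") != "Allow":
            continue
        actions = _as_list(stmt.get("Action", []))
        resources = _as_list(stmt.get("Resource", []))
        if "*" in actions and "*" in resources:
            flagged.append(stmt)
    return flagged


# Illustrative policies; the names and the bucket ARN are assumptions.
policies = {
    "admin-everything": {
        "Version": "2012-10-17",
        "Statement": [{"Effect": "Allow", "Action": "*", "Resource": "*"}],
    },
    "read-one-bucket": {
        "Version": "2012-10-17",
        "Statement": {
            "Effect": "Allow",
            "Action": ["s3:GetObject"],
            "Resource": "arn:aws:s3:::example-bucket/*",
        },
    },
}

for name, policy in policies.items():
    if overly_permissive_statements(policy):
        print(f"{name}: grants all actions on all resources")
```

This deliberately checks only the literal `*` wildcard; broader patterns such as `s3:*` would need additional rules.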

After integration, CloudGuard continuously scans the live cloud environment. Notifications can be configured so that administrators are immediately notified when rulesets fail validation. By ensuring that no internal S3 buckets have been misconfigured to allow public access and that no raw credentials have been left in the source code, this basic level of posture hardening can significantly reduce the risk posed even by successful breaches.

Cloud Network Security

Next, CloudGuard has developed the ability to natively detect internal serverless and container components, including Kubernetes, which it detects as first-level objects. CloudGuard then ensures that an appropriate firewall rule is configured at the perimeter of the code base.

CloudGuard Dome9’s Clarity functionality provides users with a visual overview of their entire cloud infrastructure account and allows users to gain an understanding of how internal cloud microsegmentation works. Within each element of the cloud network, users can inspect the security groups that have access to resources, and inspect the inbound and outbound firewall rules to see if they have been properly configured.

Workload Runtime Protection

In order to provide strong runtime security and application control within the workload, CloudGuard determines an expected baseline behavior at the application layer—investigating what each component of the architecture does and then providing detection and security based on that.

By looking at how individual components (such as an S3 bucket) access files, invoke sub-processes, and connect with other resources, CloudGuard builds entity-specific whitelists tailored to the normal, expected behavior of each resource. Compared to the traditional approach of looking at cloud infrastructure as a monolith, and building baselines upon that, Check Point’s approach allows for far more granular application and runtime security.
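The general idea of a per-entity behavioral baseline can be sketched in a few lines. This is an illustrative toy, assuming behavior is recorded as simple (entity, action, target) events; the `BehaviorBaseline` class and all entity names are hypothetical, and a production system would learn from far richer telemetry.

```python
from collections import defaultdict


class BehaviorBaseline:
    """Per-entity allowlist built from observed (action, target) pairs."""

    def __init__(self):
        self._allowed = defaultdict(set)

    def learn(self, entity, action, target):
        """Record behavior seen during the baseline (learning) period."""
        self._allowed[entity].add((action, target))

    def is_expected(self, entity, action, target):
        """Return True only if this entity was seen doing exactly this before."""
        return (action, target) in self._allowed.get(entity, set())


baseline = BehaviorBaseline()

# Hypothetical events observed while the workload behaves normally.
observed = [
    ("thumbnail-fn", "read", "s3://uploads"),
    ("thumbnail-fn", "write", "s3://thumbnails"),
    ("billing-fn", "connect", "payments-api"),
]
for event in observed:
    baseline.learn(*event)

# The same action is normal for one entity and anomalous for another.
print(baseline.is_expected("billing-fn", "connect", "payments-api"))
print(baseline.is_expected("thumbnail-fn", "connect", "payments-api"))
```

Keying the allowlist by entity is what makes the approach granular: a connection that is routine for one function is still flagged when a different function attempts it.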

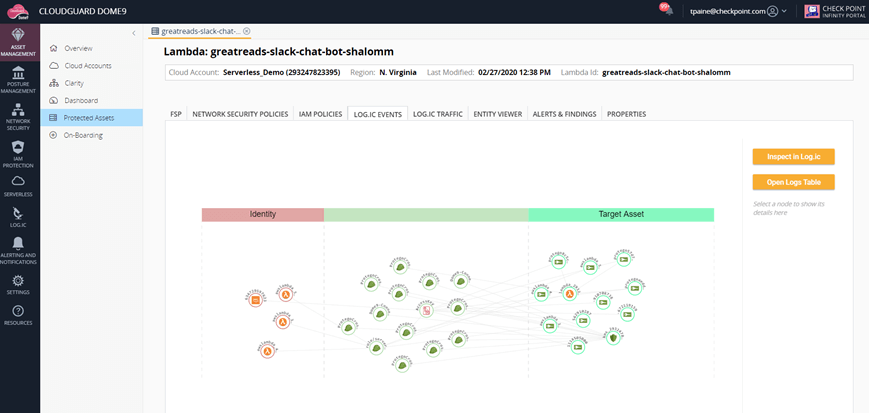

Based on this discovery process, CloudGuard provides visualizations of workload components—in this case serverless—displaying individual functions, such as an AWS Lambda function, and mapping their normal expected workloads and processes. The diagram includes connections to other resources and explains what their purpose is.

Figure 2: CloudGuard visualization of serverless components

Endpoint/Cloud Detection and Response (EDR or CDR)

Next, endpoint detection and response (EDR) and network traffic analysis (NTA) provide forensic and troubleshooting capabilities to detect malicious behavior that has not been caught by the previous three layers. Unlike the first three security pillars, these monitor the architecture’s behavior externally and as a whole.

CloudGuard Log.ic understands the normal output of the cloud and investigates suspicious activity, both in the network DMZ and in the external zone. This information is presented in a network map. CloudGuard Log.ic can also automatically parse the moving parts of cloud infrastructure, showing, for instance, when an ephemeral Lambda function was created, what it created, and which of those resources still exist.

Malware Scanning

As part of its threat prevention protocol, CloudGuard continuously scans the function during runtime to identify malware and other security risks that could jeopardize the security and compliance posture of the application. CloudGuard uses detailed threat analysis to automatically identify and block attacks originating from third-party and internal resources.

CloudGuard Log.ic integrates with Check Point ThreatCloud and flags potentially malicious connections from serverless components to known hostile entities, such as servers known to be used for malware injection. This information can be used to automatically harden firewall rules and protect the network at a lower level.
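Conceptually, flagging connections against a threat feed is a membership test. The sketch below assumes the feed is a list of hostile network ranges and that connection logs are (source, destination IP) pairs; both are simplifications, the `flag_connections` helper is hypothetical, and the addresses come from ranges reserved for documentation. It is not a description of how Log.ic or ThreatCloud work internally.

```python
import ipaddress

# Placeholder threat feed using a documentation-only range (TEST-NET-3).
HOSTILE_NETWORKS = [ipaddress.ip_network("203.0.113.0/24")]


def flag_connections(connections, hostile_networks):
    """Return the (source, destination) pairs whose destination is hostile."""
    flagged = []
    for source, dest_ip in connections:
        addr = ipaddress.ip_address(dest_ip)
        if any(addr in net for net in hostile_networks):
            flagged.append((source, dest_ip))
    return flagged


# Hypothetical outbound connections made by serverless functions.
connections = [
    ("thumbnail-fn", "198.51.100.7"),
    ("billing-fn", "203.0.113.45"),
]

for source, dest_ip in flag_connections(connections, HOSTILE_NETWORKS):
    print(f"{source} connected to known-hostile address {dest_ip}")
```

The flagged pairs are the kind of signal that could then feed an automated rule update, such as denying egress to the offending range.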

To Secure Microservices, Think Holistic Workload Security

Check Point’s workload security solution, with cloud posture management, is based on a holistic understanding of serverless and containers that both intelligently understands the function of the “moving parts” and secures them as a whole at various levels. Those deploying this new means of computing can move away from understanding the system as a monolith and leverage microsegmentation and application-level runtime security to better protect their infrastructure from threats.